Getting Started on the Testnet

The Graph Protocol Testnet Docker Compose.

This tutorial goes hand-in-hand with the video guide by Indexer_Payne.

1. Testnet Docker Compose.

The following is a monitoring solution for hosting a Graph Node on a single Docker host, with Prometheus, Grafana, cAdvisor and NodeExporter for metrics and AlertManager for alerting.

The monitoring configuration was adapted from the K8S template published by The Graph team in the Mission Control repository during the testnet, and was later adapted again for the new testnet release using this configuration.

The advantage of using Docker, as opposed to bare-metal systemd setups, is that Docker makes it easy to move the setup around and to scale it up if needed. We personally ran the whole testnet infrastructure on the same machine, including a TurboGeth archive node (not included in this Docker build).

For those considering running their infrastructure the way we did, here are our observations regarding the necessary hardware specs:

Another good thing about Docker is that the data is stored in named volumes on the Docker host and can be exported or copied to a bigger machine once more performance is needed.

Note that you need access to an Ethereum Archive Node that supports EIP-1898. The setup for the archive node is not included in this docker setup.

The minimum configuration should be the CPX51 VPS at Hetzner.

2. Ethereum Archive Node Specs.

| | Minimum Specs | Recommended Specs | Maxed out Specs |
|---|---|---|---|
| CPUs | 16 vcore | 32 vcore | 64 vcore |
| RAM | 32 GB | 64 GB | 128 GB |
| Storage | 1.5 TB SATA SSD | 7 TB NVMe | 7 TB NVMe RAID 10 |

Archive node options

| Self-hosted | Trace API | Stable | EIP-1898 | Min Disk Size |
|---|---|---|---|---|
| OpenEthereum 3.0.x | yes ✔️ | no ⚠️ | yes ✔️ | 7 TB |
| OpenEthereum 3.1 | yes ✔️ | no ⚠️ | no ☠️ | 7 TB |
| Parity 2.5.13 | yes ✔️ | yes ✔️ | no ☠️ | 7 TB |
| GETH | no ⚠️ | yes ✔️ | yes ✔️ | 7 TB |
| TurboGETH | no ⚠️ | no ⚠️ | yes ✔️ | 1.5 TB |
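The archive node must support EIP-1898, as noted in section 1, so the table can be narrowed down mechanically. The Python sketch below encodes only the rows of the table as data and filters them. The `ArchiveClient` type and the `eligible_clients` helper are hypothetical names used for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class ArchiveClient:
    name: str
    trace_api: bool
    stable: bool
    eip1898: bool
    min_disk_tb: float

# Rows copied from the "Archive node options" table above.
CLIENTS = [
    ArchiveClient("OpenEthereum 3.0.x", trace_api=True, stable=False, eip1898=True, min_disk_tb=7.0),
    ArchiveClient("OpenEthereum 3.1", trace_api=True, stable=False, eip1898=False, min_disk_tb=7.0),
    ArchiveClient("Parity 2.5.13", trace_api=True, stable=True, eip1898=False, min_disk_tb=7.0),
    ArchiveClient("GETH", trace_api=False, stable=True, eip1898=True, min_disk_tb=7.0),
    ArchiveClient("TurboGETH", trace_api=False, stable=False, eip1898=True, min_disk_tb=1.5),
]

def eligible_clients(clients: List[ArchiveClient], require_trace: bool = False,
                     require_stable: bool = False,
                     max_disk_tb: Optional[float] = None) -> List[str]:
    """Return names of clients that support EIP-1898 and pass the optional filters."""
    result = []
    for c in clients:
        if not c.eip1898:
            continue  # EIP-1898 support is mandatory for this setup
        if require_trace and not c.trace_api:
            continue
        if require_stable and not c.stable:
            continue
        if max_disk_tb is not None and c.min_disk_tb > max_disk_tb:
            continue
        result.append(c.name)
    return result

if __name__ == "__main__":
    print(eligible_clients(CLIENTS))
    print(eligible_clients(CLIENTS, max_disk_tb=2.0))
```

With a disk budget of about 2 TB, only TurboGETH passes, which matches its 1.5 TB minimum in the table.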

Service Providers (WIP)

3. Graph Protocol Infrastructure Specs.

These are the specs for The Graph Protocol infrastructure:

| | Minimum Specs | Recommended Specs | Maxed out Specs |
|---|---|---|---|
| CPUs | 16 vcore | 64 vcore | 128 vcore |
| RAM | 32 GB | 128 GB | 256/512 GB |
| Storage | 300 GB SATA SSD | 2 TB NVMe | 4 TB NVMe RAID 10 |

The specs/requirements listed here come from our own experience during the testnet.

Your mileage may vary, so take this with a grain of salt and be ready to upgrade. :) Ideally, your infrastructure should scale up in proportion to your stake in the protocol.
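To see where a given server falls in the Graph Protocol infrastructure table, you can compare it against each tier in turn. This is a minimal sketch with some stated assumptions: 1 TB is treated as 1000 GB, the "256/512 GB" entry uses its lower bound, and storage type (SATA, NVMe, RAID) is ignored. The `spec_tier` helper is hypothetical.

```python
# Tiers from the Graph Protocol infrastructure table: (name, vcores, RAM GB, storage GB).
# Assumptions: 1 TB = 1000 GB, "256/512 GB" -> 256, storage type ignored.
TIERS = [
    ("Maxed out", 128, 256, 4000),
    ("Recommended", 64, 128, 2000),
    ("Minimum", 16, 32, 300),
]

def spec_tier(vcores: int, ram_gb: int, storage_gb: int) -> str:
    """Return the highest tier whose CPU, RAM and storage requirements are all met."""
    for name, min_cpu, min_ram, min_disk in TIERS:
        if vcores >= min_cpu and ram_gb >= min_ram and storage_gb >= min_disk:
            return name
    return "Below minimum"

if __name__ == "__main__":
    print(spec_tier(64, 64, 2000))  # enough cores and disk, but RAM caps it
```

A machine is only as good as its weakest resource. In the example, 64 GB of RAM keeps an otherwise "Recommended" box at "Minimum".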

4. Installation

A) Prerequisites

On a fresh Ubuntu server, log in via SSH and execute the following commands:

B) Install from scratch

Run the following commands to clone the repository and set everything up:

C) Install or Update the Agora and Qlog modules

To update those repos to the latest version, run the following command occasionally:

To use qlog or agora, execute the `runqlog` or `runagora` script in the root of the repository.

This uses the compiled qlog tool to extract queries since yesterday (or from the last 5 hours) and stores them in the query-logs folder.

To make journald logs persistent across restarts, you need to create a folder for the logs to be stored in, like this:

That’s all.

5. Get a domain.

To enable SSL on your host, you should get a domain. You can use any domain and any registrar that allows you to edit DNS records to point subdomains to your IP address.

For a free option, go to myFreenom and find a free domain name. Create an account and complete the registration. In the last step, choose “use dns” and enter the IP address of your server. You can register the domain for free for up to 12 months.

Under “Service > My Domains > Manage Domain > Manage Freenom DNS” you can add more subdomains later.

Create 3 subdomains, named as follows:
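Once the DNS records are in place, it can be worth checking that each subdomain actually resolves to your server before enabling SSL. The sketch below uses only the standard library. `query` and `index` are taken from the container URLs in section 7. The third subdomain, the domain `example.tld`, the IP address and the `check_subdomains` helper are hypothetical placeholders. The resolver is injectable so the function can be tried offline.

```python
import socket
from typing import Callable, Dict, Iterable

def check_subdomains(
    domain: str,
    subdomains: Iterable[str],
    expected_ip: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> Dict[str, bool]:
    """Map each fully qualified subdomain to whether it resolves to expected_ip."""
    results = {}
    for sub in subdomains:
        fqdn = f"{sub}.{domain}"
        try:
            results[fqdn] = resolve(fqdn) == expected_ip
        except OSError:
            # socket.gaierror (a subclass of OSError): the name does not resolve yet.
            results[fqdn] = False
    return results

if __name__ == "__main__":
    # Offline demo with a stub resolver; omit `resolve=` to query real DNS.
    fake_dns = {"query.example.tld": "203.0.113.10"}

    def stub(name: str) -> str:
        if name not in fake_dns:
            raise socket.gaierror(f"unknown host {name}")
        return fake_dns[name]

    print(check_subdomains("example.tld", ["query", "index"], "203.0.113.10", resolve=stub))
```

New records can take a while to propagate, so a `False` right after editing the DNS zone is not necessarily an error.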

6. Create a mnemonic.

You need a wallet with a seed phrase that is registered as your operator wallet. This wallet makes transactions on behalf of your main wallet (which holds and stakes the GRT). The operator wallet has limited functionality, and using one is recommended for security reasons.

To create a mnemonic ETH wallet, you can go to this website and just press Generate. You get a seed phrase in the input field labeled BIP39 Mnemonic. Scroll down a bit to the select field labeled Coin and choose ETH from the dropdown. Then scroll further down to the section headed “Derived Addresses”: the first row of the table contains your address, public key and private key. You can import the wallet into MetaMask using the private key.
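Behind the scenes, the first step such a generator performs is defined by the BIP39 standard. The seed phrase is stretched into a 512-bit seed with PBKDF2-HMAC-SHA512, using 2048 rounds and the salt `"mnemonic"` plus an optional passphrase. The ETH address is then derived from that seed through BIP32/BIP44 key derivation, commonly along the path m/44'/60'/0'/0/0. That part needs secp256k1 and Keccak and is not shown here. The sketch below reproduces only the seed step. The function name `bip39_seed` is hypothetical, and it does not validate the mnemonic's checksum.

```python
import hashlib
import unicodedata

def bip39_seed(mnemonic: str, passphrase: str = "") -> bytes:
    """BIP39 mnemonic-to-seed step: PBKDF2-HMAC-SHA512, 2048 rounds, 64-byte output."""
    # BIP39 requires NFKD normalization of both the mnemonic and the salt.
    password = unicodedata.normalize("NFKD", mnemonic).encode("utf-8")
    salt = unicodedata.normalize("NFKD", "mnemonic" + passphrase).encode("utf-8")
    return hashlib.pbkdf2_hmac("sha512", password, salt, 2048, dklen=64)

if __name__ == "__main__":
    phrase = "abandon " * 11 + "about"  # a well-known test phrase; never use it for funds
    print(bip39_seed(phrase).hex())
```

The same phrase always yields the same seed, and therefore the same address. This is why the seed phrase alone is enough to restore the operator wallet, and why it must be kept secret.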

7. Run.

In the root of the repo, create the file called `start` (or edit it, since it is already there) and insert the following lines:

To start the software, just run `bash start`.

To find your GEO_COORDINATES, use an IP location website and look up your server's exact coordinates.

`JSON_STRING`: do not modify this; leave it as it is.

In case something goes wrong, try adding `--force-recreate` at the end of the command, e.g. `bash start --force-recreate`.

Containers:

- Graph Node (query node): `https://query.sld.tld`

- Graph Node (index node): `https://index.sld.tld`

- Indexer Agent

- Indexer Service

- Indexer CLI

- Postgres Database for the index/query nodes

- Postgres Database for the agent/service nodes

- Prometheus (metrics database): `http://<host-ip>:9090`

- Prometheus-Pushgateway (push acceptor for ephemeral and batch jobs): `http://<host-ip>:9091`

- AlertManager (alerts management): `http://<host-ip>:9093`

- Grafana (visualize metrics): `http://<host-ip>:3000`

- NodeExporter (host metrics collector)

- cAdvisor (containers metrics collector)

- Caddy (reverse proxy and basic auth provider for prometheus and alertmanager)
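After `bash start`, a quick way to see whether the monitoring services are listening is a plain TCP connection to each port. This sketch assumes the port mapping Prometheus 9090, Pushgateway 9091, AlertManager 9093 and Grafana 3000, which are also those tools' usual default ports. A successful connection only proves that something is listening. It says nothing about basic auth or whether the service is healthy. `check_ports` and `MONITORING_PORTS` are hypothetical names.

```python
import socket
from typing import Dict

# Assumed host ports for the monitoring containers.
MONITORING_PORTS = {
    "prometheus": 9090,
    "pushgateway": 9091,
    "alertmanager": 9093,
    "grafana": 3000,
}

def check_ports(host: str, ports: Dict[str, int], timeout: float = 2.0) -> Dict[str, bool]:
    """Return, for each service, whether a TCP connection to host:port succeeds."""
    status = {}
    for name, port in ports.items():
        try:
            with socket.create_connection((host, port), timeout=timeout):
                status[name] = True
        except OSError:  # refused, unreachable or timed out
            status[name] = False
    return status

if __name__ == "__main__":
    for name, ok in check_ports("127.0.0.1", MONITORING_PORTS).items():
        print(f"{name:<13} {'up' if ok else 'DOWN'}")
```

Run it on the Docker host itself (127.0.0.1), or pass your server's IP to check from outside, which also tests your firewall rules.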

8. Updates and Upgrades.

The general procedure is the following:

This will update the scripts from the repository.

To upgrade the containers:

To update the Agora or Qlog repos to the latest version, run the following command occasionally:

To use qlog or agora, execute the `runqlog` or `runagora` script in the root of the repository.

9. Managing Allocations.

Next, let's look at how you can manage your allocations.

- To control the allocations, we will use the indexer-cli

- To check our allocations, we will use the indexer-cli, other community-made tools, and later on, the Graph Explorer

A) How to check your allocations

Assuming you already have the graph-cli and indexer-cli installed, type the following in the root of the directory:

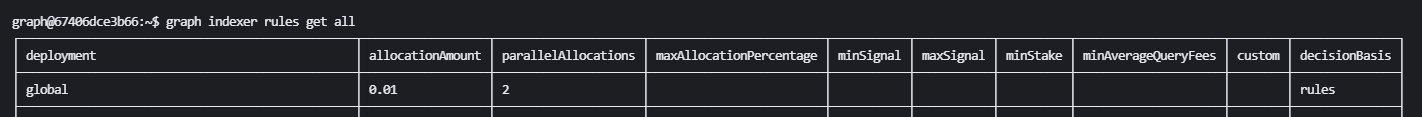

You will be greeted by this table. By default, it is set to the following values:

What do the table columns mean?

- deployment: either global, or the IPFS hash of a subgraph of your choice

- allocationAmount: the GRT allocation that you want to set, either globally or for a specific subgraph, depending on your preference

- parallelAllocations: influences how many state channels the gateways open with you for a deployment, which in turn affects the maximum query request rate you can receive

- minSignal: conditional decision basis ruled by the minimum subgraph signal

- maxSignal: conditional decision basis ruled by the maximum subgraph signal

- minStake: conditional decision basis ruled by the minimum subgraph stake

- minAverageQueryFees: conditional decision basis ruled by the minimum average of query fees

- decisionBasis: dictates the behavior of your rules

General caveats

Your total stake on a specific subgraph, or globally, will be calculated as follows:

Example:
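Example 2 in the next subsection allocates 100 GRT × 10 parallelAllocations. Judging from that, one plausible reading is that the stake per subgraph equals allocationAmount × parallelAllocations, and your global total is that product summed over every subgraph you allocate to. The sketch below encodes only this assumption, so verify it against what indexer-cli reports. The helper names and deployment IDs are hypothetical.

```python
from typing import Dict, Tuple

def subgraph_stake(allocation_amount: float, parallel_allocations: int) -> float:
    """Assumed stake per subgraph: allocationAmount x parallelAllocations."""
    return allocation_amount * parallel_allocations

def total_stake(rules: Dict[str, Tuple[float, int]]) -> float:
    """Sum the assumed per-subgraph stake over {deployment: (allocationAmount, parallelAllocations)}."""
    return sum(subgraph_stake(amount, parallel) for amount, parallel in rules.values())

if __name__ == "__main__":
    # Hypothetical deployments, using the numbers from the examples in this section:
    # 100 GRT x 10 parallel, and 1000 GRT inheriting parallelAllocations 2 from global.
    rules = {"QmDeploymentA": (100.0, 10), "QmDeploymentB": (1000.0, 2)}
    print(total_stake(rules))
```

Under this reading, raising parallelAllocations multiplies the GRT you lock, so check your available stake before increasing it.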

decisionBasis can take one of three values: always, never and rules.

- decisionBasis always overrides any conditional decision basis rules you might have set (minStake, minSignal, etc.) and ensures that your allocation is always active.

- decisionBasis never does the same, except that it ensures that your allocation is always inactive.

- decisionBasis rules lets you use the conditional decision basis.
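The three decisionBasis values can be summarized as a small decision function. This is a simplified model, not the indexer-agent's implementation. It only covers the minSignal/maxSignal window used in example 3 below ("between 100 and 200 signal") and leaves out minStake and minAverageQueryFees. The `Rule` type, the `should_allocate` function and the choice that "rules" without any threshold does not allocate are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    decision_basis: str  # "always", "never" or "rules"
    min_signal: Optional[float] = None
    max_signal: Optional[float] = None

def should_allocate(rule: Rule, signal: float) -> bool:
    """Simplified decisionBasis model; only the signal thresholds are considered."""
    if rule.decision_basis == "always":
        return True   # conditional thresholds are ignored
    if rule.decision_basis == "never":
        return False  # conditional thresholds are ignored
    if rule.decision_basis == "rules":
        if rule.min_signal is None and rule.max_signal is None:
            return False  # assumption: no condition set means nothing to satisfy
        if rule.min_signal is not None and signal < rule.min_signal:
            return False
        if rule.max_signal is not None and signal > rule.max_signal:
            return False
        return True
    raise ValueError(f"unknown decisionBasis: {rule.decision_basis!r}")

if __name__ == "__main__":
    window = Rule("rules", min_signal=100, max_signal=200)
    print([should_allocate(window, s) for s in (50, 150, 250)])
```

The key point is precedence: always and never short-circuit before any threshold is looked at.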

By default, the global rule has an allocationAmount of 0.01 GRT and parallelAllocations set to 2.

This means that, by default, every time you set an allocationAmount for a specific subgraph, it inherits the parallelAllocations 2 rule from global.
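The inheritance described above works like a dictionary merge: any field a deployment rule leaves unset falls back to the global rule. This sketch uses only the two default values quoted above. The `merge_with_global` helper, the `GLOBAL_RULE` dict and the deployment hash are hypothetical, and the real indexer-cli may represent rules differently.

```python
from typing import Any, Dict, Optional

# Default global values quoted in this guide.
GLOBAL_RULE: Dict[str, Any] = {
    "deployment": "global",
    "allocationAmount": 0.01,  # GRT
    "parallelAllocations": 2,
}

def merge_with_global(rule: Dict[str, Optional[Any]], global_rule: Dict[str, Any]) -> Dict[str, Any]:
    """Fill every unset (None or missing) field of a deployment rule from the global rule."""
    merged = dict(global_rule)
    merged.update({key: value for key, value in rule.items() if value is not None})
    return merged

if __name__ == "__main__":
    deployment_rule = {"deployment": "QmExampleHash", "allocationAmount": 1000, "parallelAllocations": None}
    print(merge_with_global(deployment_rule, GLOBAL_RULE))
```

Here the 1000 GRT rule silently picks up parallelAllocations 2. This is why it pays to check the merged view before assuming how much stake a rule will lock.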

To see the global rules merged into the rest of your allocations table, you can use the following command:

B) How to set allocations?

Examples:

1.

Assuming the {IPFS_HASH} exists on-chain, this sets an allocationAmount of 1000 GRT and, through decisionBasis always, ensures that the subgraph is always allocated.

2.

This command automatically allocates 100 GRT × 10 parallelAllocations to every subgraph that exists on-chain (10 parallel allocations per subgraph).

3.

This command lets you use the conditional decision basis rules minSignal and maxSignal. The Agent will only allocate to the subgraph IF network participants have put at least 100 and at most 200 signal on it.

Generally speaking, you'll be fine using either the first or the second command, as they are straightforward. Just be aware of the parallelAllocations number.

C) How to verify your allocations?

We can use the following command(s) with the indexer-cli:

This will only display the subgraph-specific allocation rules

This will display the full rules table

This will display the full rules table with the global values merged

D) What happens after you set your allocations?

The indexer-agent will now start to allocate the amount of GRT that you specified for each subgraph that it finds to be present on-chain.

Depending on how many subgraphs you allocated towards, it will take time for this action to finish.

Keep in mind that once the indexer-agent has been given instructions to allocate, it puts everything into a queue of transactions that you cannot cancel. For example, if you set global always and then immediately set global rules or never, the agent will first execute the full set of transactions for global always, then go back and deallocate from those subgraphs with your second set of transactions. This means that you will likely face a long delay between the time you enter a command and the time the actions are finalized on-chain.

A workaround for this is to restart the indexer-agent app/container, as this resets its internal queue management system and starts again from the freshest data it has.
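The difference between a queue that replays every change and a fresh start can be pictured with a toy model. `enqueue` appends a full batch of actions for each rule change, as in the always-then-never scenario above. `reconcile` computes only the difference between the desired and current state, which is roughly what a restarted agent works from. Both functions and the deployment IDs are hypothetical, and the model covers only global always/never. It illustrates the reasoning and is not the indexer-agent's code.

```python
from collections import deque
from typing import Deque, Iterable, Set, Tuple

Action = Tuple[str, str]  # (kind, deployment)

def _check(basis: str) -> None:
    if basis not in ("always", "never"):
        raise ValueError("this toy model only covers 'always' and 'never'")

def enqueue(queue: Deque[Action], deployments: Iterable[str], basis: str) -> None:
    """Append a full batch of actions for a rule change, regardless of what is queued."""
    _check(basis)
    kind = "allocate" if basis == "always" else "unallocate"
    for d in deployments:
        queue.append((kind, d))

def reconcile(allocated: Set[str], deployments: Iterable[str], basis: str) -> Deque[Action]:
    """Only the actions needed to move from the current to the desired state."""
    _check(basis)
    desired = set(deployments) if basis == "always" else set()
    actions = [("allocate", d) for d in sorted(desired - allocated)]
    actions += [("unallocate", d) for d in sorted(allocated - desired)]
    return deque(actions)

if __name__ == "__main__":
    subgraphs = ["QmA", "QmB", "QmC"]
    queue: Deque[Action] = deque()
    enqueue(queue, subgraphs, "always")  # global always...
    enqueue(queue, subgraphs, "never")   # ...immediately followed by global never
    print(len(queue), "queued transactions")
    print(len(reconcile(set(), subgraphs, "never")), "transactions after a restart")
```

In the queued model, flipping the rule costs twice the transactions and ends where it started. Starting fresh from the current state skips the round trip entirely.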

Another workaround is to delete your rules with `graph indexer rules delete {IPFS}`.

10. Managing cost models.

A) What are cost models?

Cost models are tools that indexers can use to set a price for the data they serve.

You cannot earn GRT for the queries that you serve without a cost model.

The cost models are denominated in decimal GRT.

Cost models can have two parts:

- The model — should contain the queries that you want to price

- The variables — should contain the variables that the queries use

You can either have a static, simple cost model, or you can dive into complicated cost models based on your database access times for different queries that you serve across different subgraphs, etc.

The decision here is totally up to you 🙂

B) What does a model look like?

The easiest cost model you can set looks something like this:

Example — you’re serving every query at 0.01 GRT / query
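A flat model charges the same price for every query, so the gross fees it implies are simply price × number of queries. The sketch uses Python's `Decimal` because cost models are denominated in decimal GRT, and binary floats would introduce rounding noise. It computes only the gross amount implied by the model. The `flat_cost` helper is hypothetical.

```python
from decimal import Decimal

def flat_cost(price_per_query: Decimal, queries: int) -> Decimal:
    """Gross fees implied by a flat cost model: the same price for every query."""
    return price_per_query * queries

if __name__ == "__main__":
    price = Decimal("0.01")  # GRT per query, as in the example above
    print(flat_cost(price, 1_000))
```

At 0.01 GRT per query, 1,000 queries come to 10 GRT. Scale the query count to your expected traffic to judge whether the price makes sense.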

C) What do the variables look like?
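Conceptually, the model refers to named variables whose values are supplied separately, typically as a small JSON document. This lets you adjust prices without rewriting the model. The Python sketch below mimics that idea and is not the cost model language itself. The variable `SYSTEM_LOAD` and the helper `price_query` are hypothetical.

```python
import json
from decimal import Decimal

# Hypothetical variables document, as it might be supplied alongside a model.
VARIABLES_JSON = '{"SYSTEM_LOAD": 1.5}'

def price_query(base_price: Decimal, variables: dict) -> Decimal:
    """Scale a base price by a variable looked up at pricing time (default 1)."""
    load = Decimal(variables.get("SYSTEM_LOAD", "1"))
    return base_price * load

if __name__ == "__main__":
    # parse_float=Decimal keeps 1.5 exact instead of turning it into a binary float.
    variables = json.loads(VARIABLES_JSON, parse_float=Decimal)
    print(price_query(Decimal("0.01"), variables))
```

Changing the JSON value, for instance raising the load factor during peak hours, reprices every query without touching the model.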

11. Debugging and troubleshooting.

First off, I strongly recommend installing either graph-pino or pino-pretty for this, along with Grafana or another tool that collects the data scraped by Prometheus and makes it easily accessible at a glance.

You will need NPM installed for this.

To install graph-pino, you can simply do:

To install pino-pretty, you can simply do:

And you’re done. These two will greatly help you read through the indexer-agent and indexer-service logs to easily find errors and warning messages that might occur.

To use them, you can use the following examples:

Graph-pino with Docker

Graph-pino with Journald

Pino-pretty with Docker

Pino-pretty with Journald
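graph-pino and pino-pretty help because the indexer-agent and indexer-service logs are JSON lines in pino's format. If you only want to pull out warnings and errors, a few lines of Python can do a rough version of that filtering. This sketch assumes each log line is a JSON object with pino's numeric `level` field (30 = info, 40 = warn, 50 = error, 60 = fatal) and a `msg` field. The `warnings_and_errors` helper is hypothetical.

```python
import json
from typing import Iterable, Iterator

# Standard pino numeric log levels.
LEVELS = {10: "TRACE", 20: "DEBUG", 30: "INFO", 40: "WARN", 50: "ERROR", 60: "FATAL"}

def warnings_and_errors(lines: Iterable[str], min_level: int = 40) -> Iterator[str]:
    """Yield 'LEVEL msg' for JSON log lines at or above min_level; skip other lines."""
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # e.g. plain-text lines mixed into container output
        if not isinstance(entry, dict):
            continue
        level = entry.get("level")
        if isinstance(level, int) and level >= min_level:
            yield f"{LEVELS.get(level, level)} {entry.get('msg', '')}"
```

You can feed it any iterable of lines, for example an open log file, and print what it yields. Lowering `min_level` to 30 also includes info messages.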

12. Troubleshooting scenarios.
